How I used machine learning to build a searchable Kanuri-English database.

Here is a bit of a back story for context.

My dad sent me a PDF of a Kanuri to English dictionary. The document is "A Dictionary of Manga, a Kanuri language of Eastern Niger and NE Nigeria" by Kevin Jarrett.

I loved the idea, and it is very handy to have... when you know the word in Kanuri and want the definition in English.

However, I was facing an issue: I wanted to translate words from English to Kanuri. Therefore, I decided to create a searchable database where I could just enter a word in English and I would get the translation in Kanuri.

Here is my little one-week journey toward it.

How to extract the PDF content and save them in a Database?

On January 28th, I decided to open the PDF and extract the words from it. I decided to use Python for it, and I used the library PyPDF2. However, since the PDF document that I was attempting to open is a binary file, the output was binary-encoded.

import PyPDF2

# i created a one-page of the dictionary for test purposes so i don't have to browse the 160 pages.

pdfFile = open('dictionary_one_page.pdf', 'rb')

pdfReader = PyPDF2.PdfFileReader(pdfFile)

pages = pdfReader.getPage(0)

print(pages.extractText())

pdfFile.close()It would be a pain to open it and decode it. Therefore, I decided to think about another more efficient method to do that. I gave it a thought for a couple days.

After sleeping on it for couple of days I decided to approach it in a different way. I came up with a better solution: use OCR. OCR, or Optical Character Recognition. OCR (in this context) is the electronic translation of photos of typed, handwritten or printed text into machine-encoded text, whether from a scanned document, a picture of a document.

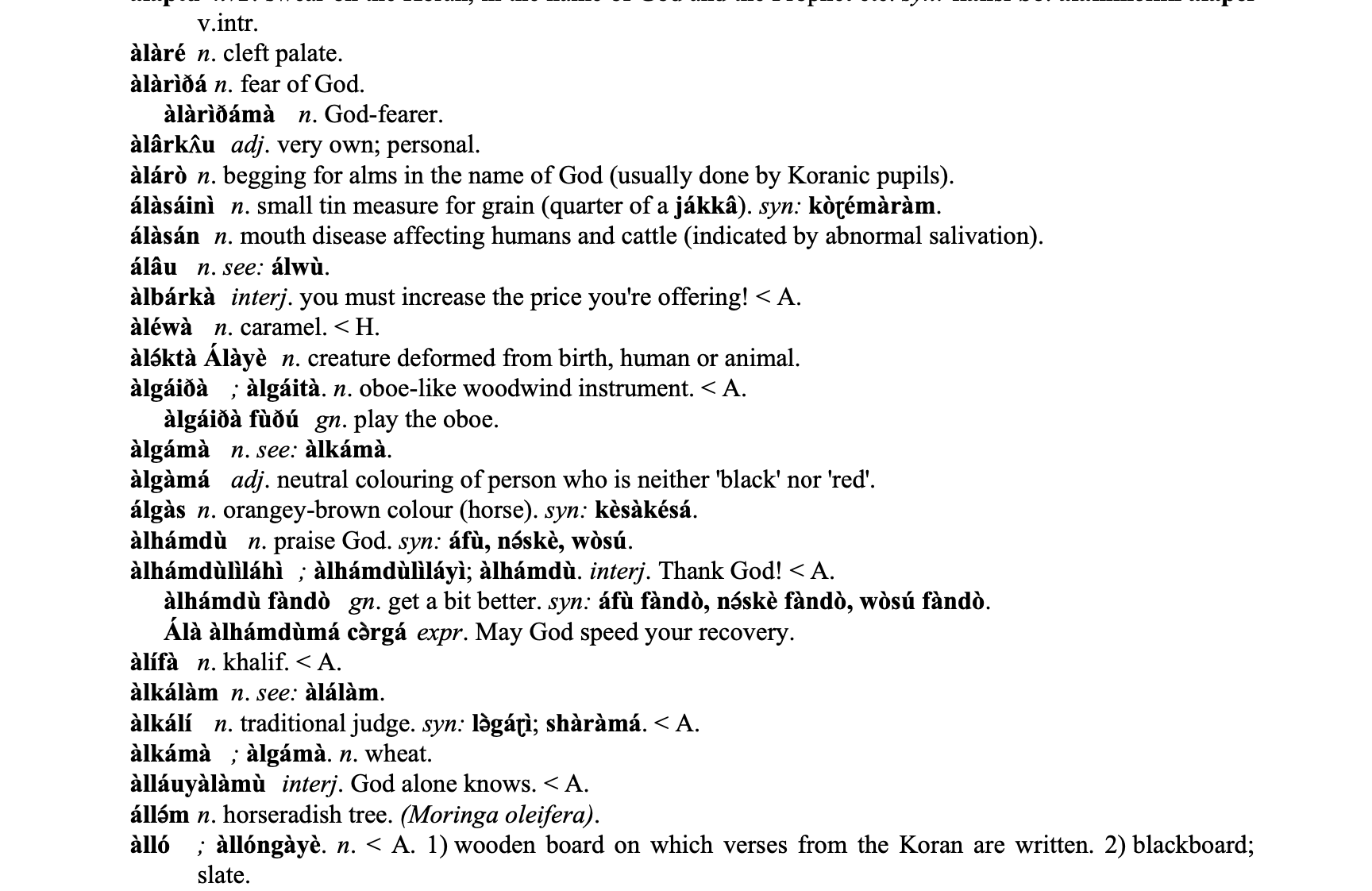

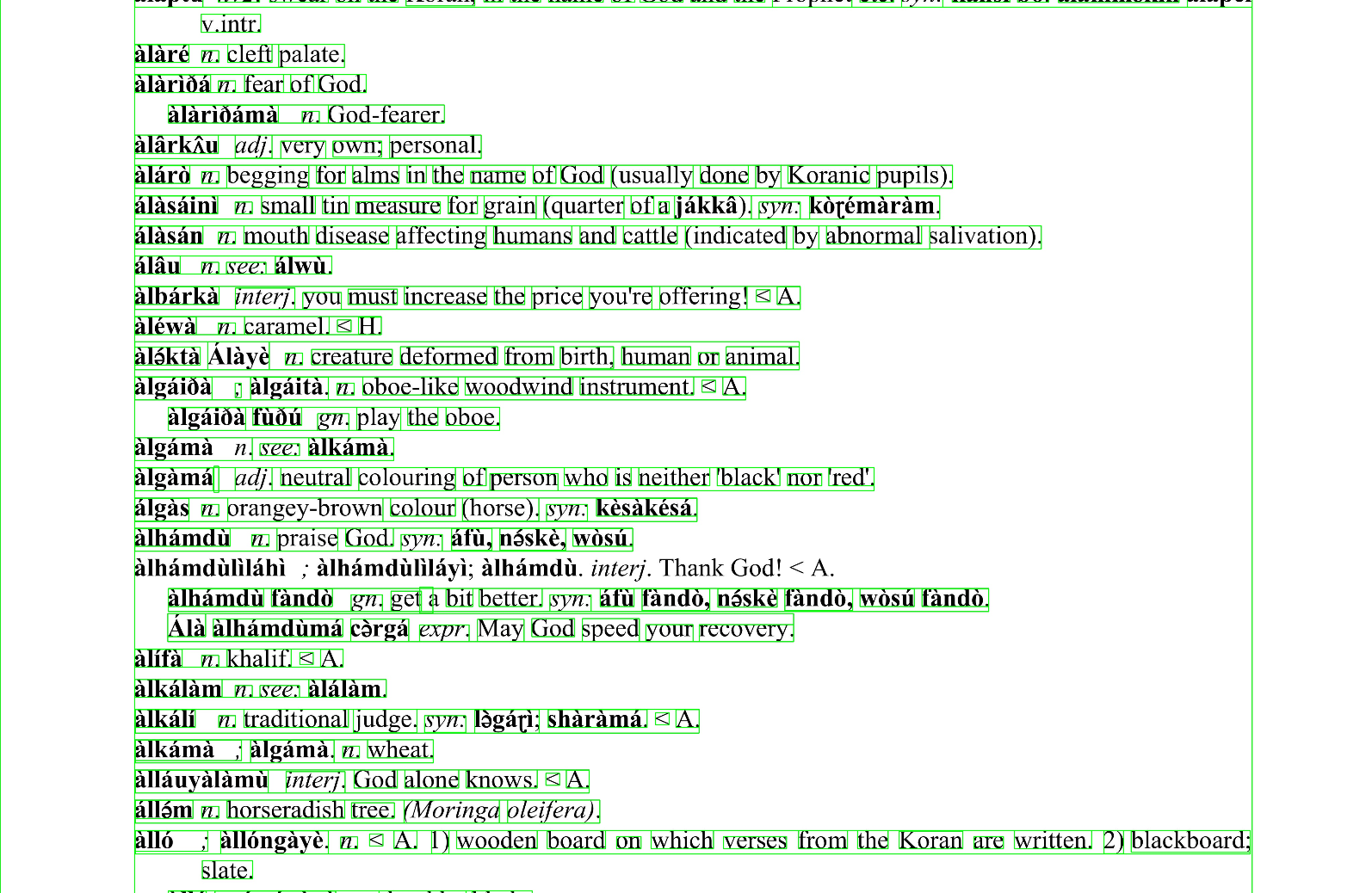

For instance, below is a page of the PDF document.

Basically, the computer reads the document, deciphers characters and converts everything into a text file. BUT, we cannot just do OCR on a PDF file. I then decided to convert the PDF file into an image (each page corresponds to an image). The PDF is roughly 160 pages longs (minus introductions).

So, now this was the easy part.

The not-so-easy part is to find a way to accurately separate words and their meaning in English.

The first method I thought of was using stop characters that they used in order to separate words and their English meanings "n.", "2nv", etc... however this method is limited when we see that some words do not have these, for example.

Another method I found, and that I ended up keeping for this phase, is to take each line and turn them into an array of words, for the separation, I used a simple findall() with python's regex (re), the regex is this one:

`r’\b\w+\b’`it grabs all the words and strips out the punctuations.

When this is done, I used NLTK’s Corpus module, Words; this is used to find all words in English.

I then create a string for words in English, and another string for the words that are not in English, they are then in Kanuri (obviously).

Each is then passed to a dictionary that will be stored in a JSON format (maybe will store them in an actual database later).

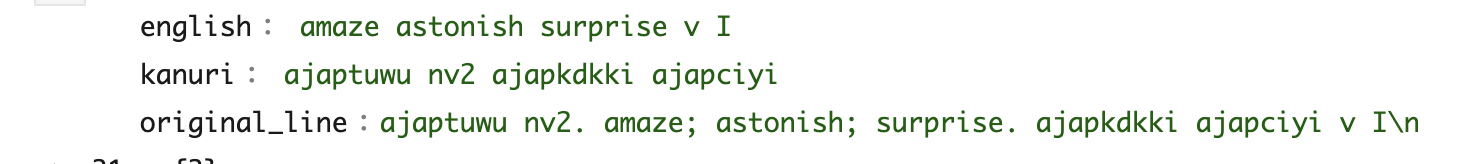

When this is done, we end up with something like this:

The JSON has 3 properties: English, Kanuri, and the original line.

As you can see, some work has to be done at stripping down the content. But this is a good start. I make sure to keep the original line in case I later find a better way to clean everything up so the English value and the Kanuri values can be updated—maybe manually.

Building the web app

Now that I found a way to extract and clean the data, I decided to build a web app that people can use to search words and expressions. Since I started by using python, and python already has a SQLite module implemented, I decided to use that to store the data in.

As for the web app, since I used python in order to extract and classify the words, my first choice was to use Flask and use it for the API. As I started, I had some more ideas & functionalities for the platform. And since I did not want to spend ages building the web app, I decided to use Laravel instead (I am more familiar with Laravel than Flask).

Though most of the data analysis and processing will be done in Python, the user interface and API will be made using Laravel and PHP 8.

Laravel and Python both have SQLite modules built in, therefore it will be very easy to use both technologies together.

(If requested, I will go over that later in more detail)

Classify Kanuri/English

As stated above, one of the problems was to classify some of the words between Kanuri and English. One method that I came across was to use NLTK's corpus. However, it showed some limits when it comes to single characters to three characters words.

I decided then to try to apply a bit of machine learning. I then wrote a linear regression classifier for this purpose using PyTorch.

How did I do that?

The AI/ML section is relatively long to explain and may eventually need a longer post in order to go over it. So I will just summarize here. If requested, I will write an entire post on it and probably share the source code of my model, training dataset and classifier.

Well, it was easy but tedious---it took me about 2 days. I had to handpick 260 expressions/words (10 for each letter of the alphabet) and then write them properly (without complex accents) and classify them as Kanuri. Because Kanuri words have a specific syntax with a lot of accents, it is kind of easy to differentiate them from English words.

Why 10? Why 260? Because Kanuri words have a very complex syntax. Between accents, apostrophes, fricatives, and spaces that mean different things; I had to build a model that is versatile and that would not take me forever to handpick relevant samples.

I then took all my 9.3k words in the classifier (Kanuri and English strings) and compared them against the 200 samples that I used before.

The classifier then spits out an array with Kanuri and English.

Which worked very well (80% accuracy vs. ~60% for the method using NLTK's Corpus).

The database is available at https://kanuri.info. More manual processing will be made in order to clean out the outputs.